Updates Oct 21

PGrid

- Wired up the cpu details page table to list individual cpus. Required a little refactoring to reduce code duplication.

- More refactoring: created shared Prisma queries for CPUs. This deduplicates a few queries and lowers the chance of bugs. Having all the queries in one place also makes it easy to reuse one query inside another.
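A rough sketch of what reusing one query inside another can look like. The field and relation names (`model`, `cores`, `snapshots`, `listings`, `createdAt`) are made up for illustration, and the objects are plain constants here; in a real project they would presumably be checked against the generated Prisma types.

```typescript
// Hypothetical field names, not the actual PGrid schema.

// Base fields that every CPU query needs.
const cpuBaseSelect = {
  id: true,
  model: true,
  cores: true,
} as const;

// Table view: base fields plus only the most recent price snapshot.
const cpuTableSelect = {
  ...cpuBaseSelect,
  snapshots: { orderBy: { createdAt: "desc" }, take: 1 },
} as const;

// Details view: reuses the same base fields and adds listings with their snapshots.
const cpuDetailsSelect = {
  ...cpuBaseSelect,
  listings: { include: { snapshots: true } },
} as const;

// Intended usage (not executed here, needs a generated client):
//   prisma.cpu.findMany({ select: cpuTableSelect })
//   prisma.cpu.findUnique({ where: { id }, select: cpuDetailsSelect })
```

Adding a field to `cpuBaseSelect` then updates both the table and details queries in one place.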

- Figured out that the random too-cheap eBay prices came from one USA-based instance that did eBay scraping. Usually it got AUD prices, but sometimes eBay randomly converted prices to USD. Removed that instance from scraping until I have proxies or currency handling set up.

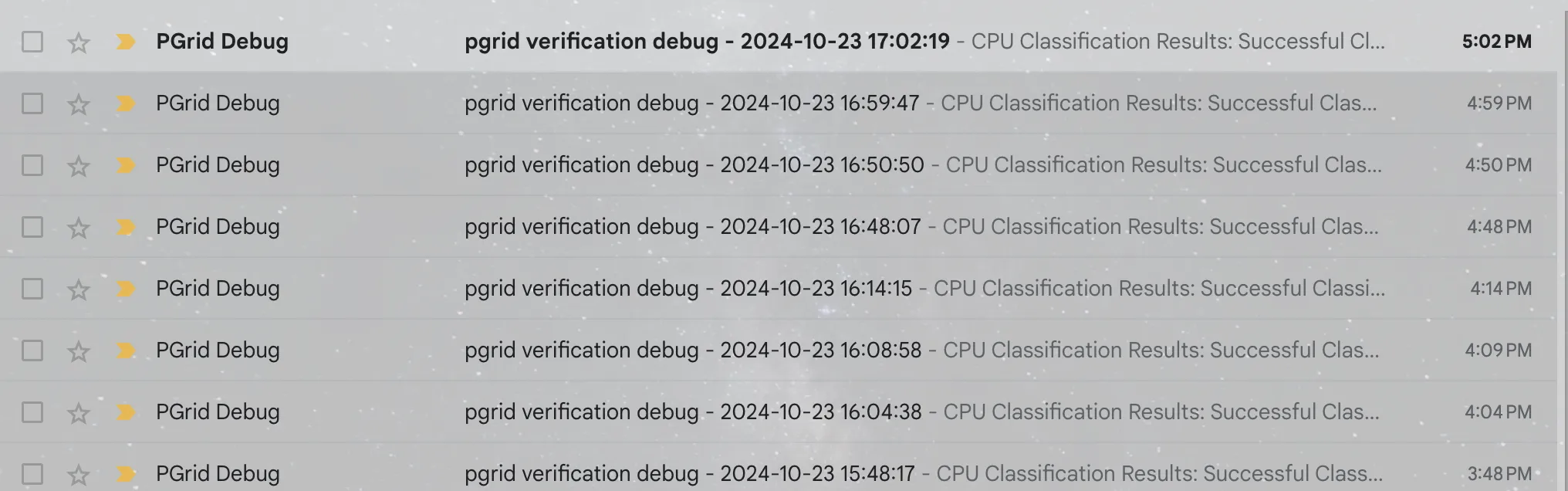
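One possible shape for the currency handling mentioned above is to parse the currency marker out of the scraped price text and drop anything that is not confirmed AUD. This is only a sketch: the price formats (`AU $123.45`, `US $80.00`, bare `$50.00`) and the function names are assumptions, not what the scraper currently does.

```typescript
type Currency = "AUD" | "USD" | "UNKNOWN";

interface ParsedPrice {
  currency: Currency;
  amount: number;
}

// Assumed formats: "AU $123.45", "US $80.00", or a bare "$50.00".
function parsePrice(raw: string): ParsedPrice | null {
  const match = raw.trim().match(/^(AU|US)?\s*\$\s*([\d,]+(?:\.\d+)?)$/i);
  if (!match) return null;
  const [, prefixRaw = "", digits = ""] = match;
  const prefix = prefixRaw.toUpperCase();
  const currency: Currency =
    prefix === "AU" ? "AUD" : prefix === "US" ? "USD" : "UNKNOWN";
  const amount = Number(digits.replace(/,/g, ""));
  return Number.isFinite(amount) ? { currency, amount } : null;
}

// Keep a snapshot only when the price is explicitly AUD.
// USD and unmarked prices are rejected rather than guessed at.
function toAudSnapshot(raw: string): number | null {
  const parsed = parsePrice(raw);
  return parsed !== null && parsed.currency === "AUD" ? parsed.amount : null;
}
```

Rejecting unmarked prices is the conservative choice: a missing snapshot is easier to live with than a USD price stored as AUD.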

- More experimenting with CPU classification. The current iteration uses a cheaper model to do an initial classification of 50 CPUs at a time, feeding it all the CPU models currently in the database. Claude Sonnet then reviews that classification and only outputs the classifications that were incorrect. This is useful in two ways: I believe getting multiple models to classify is better than one, even if one of them is a lower-spec model; and since Claude is kinda expensive for output tokens, having it output only when a change is required keeps costs pretty low. A debug email goes out after classification, so I have a log of what was done and can check it manually.
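The classify-then-review flow can be sketched roughly like this. The `Classifier` and `Reviewer` types stand in for the actual model calls and are hypothetical, as are the other names; the point is only the batching and the "reviewer returns corrections only" merge.

```typescript
interface Listing {
  id: string;
  title: string;
}

// cpuModel is null when no known CPU model matches the listing.
interface Classification {
  listingId: string;
  cpuModel: string | null;
}

// Hypothetical wrappers around the cheap model and the reviewing model.
type Classifier = (batch: Listing[], knownModels: string[]) => Promise<Classification[]>;
type Reviewer = (
  batch: Listing[],
  initial: Classification[],
  knownModels: string[],
) => Promise<Classification[]>; // returns ONLY the entries it disagrees with

function chunk<T>(items: T[], size: number): T[][] {
  const out: T[][] = [];
  for (let i = 0; i < items.length; i += size) out.push(items.slice(i, i + size));
  return out;
}

// Overlay reviewer corrections; anything the reviewer did not mention is kept as-is.
function applyCorrections(
  initial: Classification[],
  corrections: Classification[],
): Classification[] {
  const byId = new Map(corrections.map((c) => [c.listingId, c] as const));
  return initial.map((c) => byId.get(c.listingId) ?? c);
}

async function classifyAll(
  listings: Listing[],
  knownModels: string[],
  cheap: Classifier,
  review: Reviewer,
  batchSize = 50,
): Promise<Classification[]> {
  const results: Classification[] = [];
  for (const batch of chunk(listings, batchSize)) {
    const initial = await cheap(batch, knownModels);
    const corrections = await review(batch, initial, knownModels);
    results.push(...applyCorrections(initial, corrections));
  }
  return results;
}
```

Because the reviewer only emits corrections, its output stays small when the cheap model is mostly right, which is where the cost saving comes from.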

- While classification seems OK for now, I need to implement a way to handle listings that don’t get classified to a CPU, whether because I don’t have that CPU model in the db yet, because the listing isn’t a CPU, or for some other reason.

- CPU table and details pages are now live on PGrid. Only listings from 3 sources, limited price history and no email price alert functionality yet.

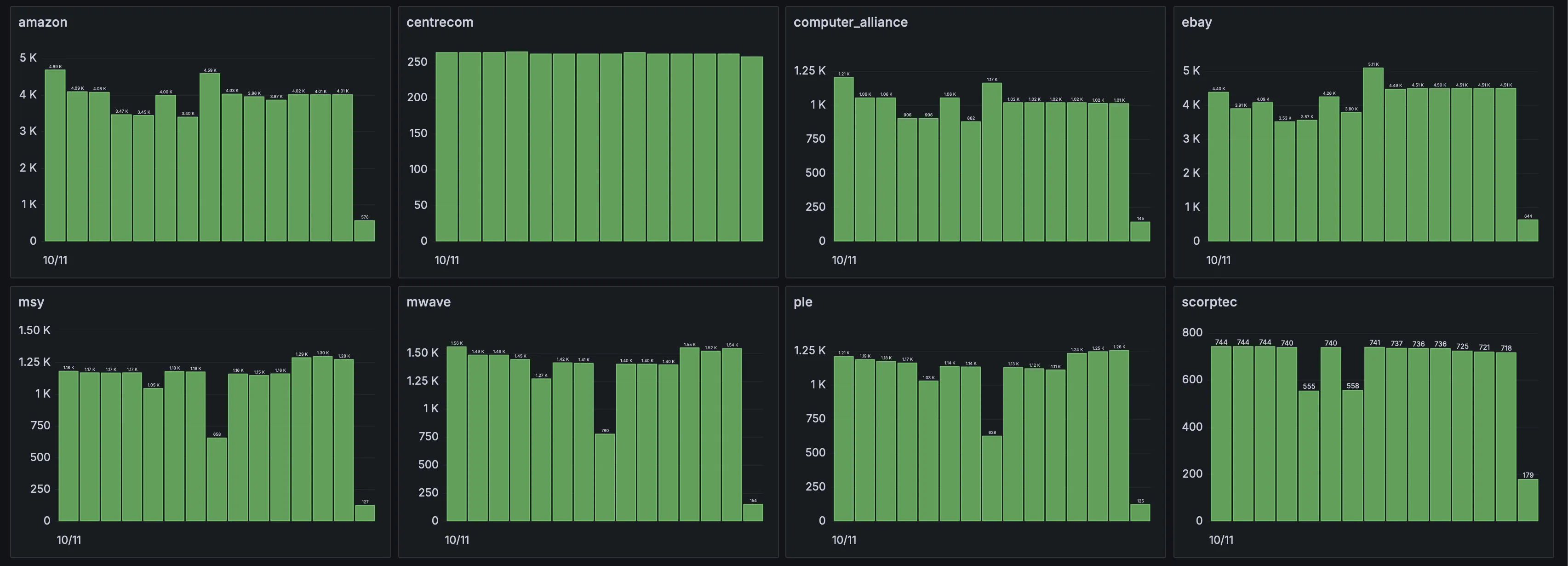

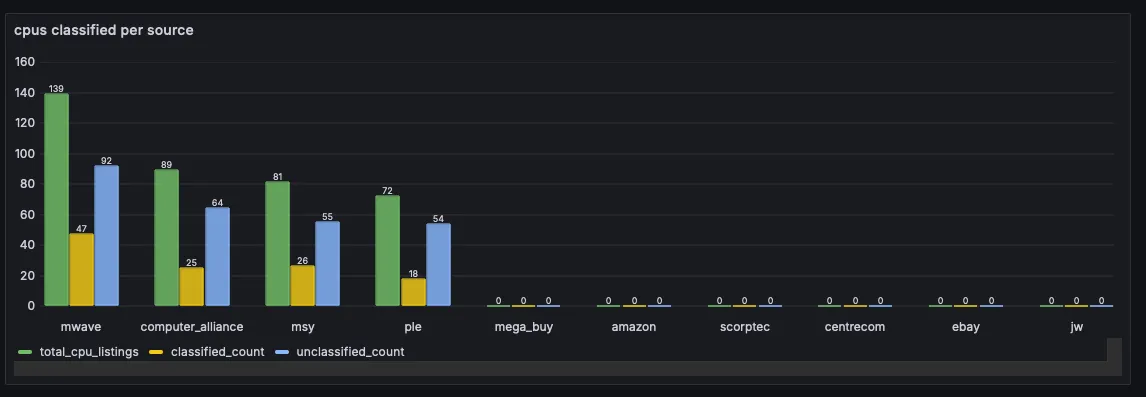

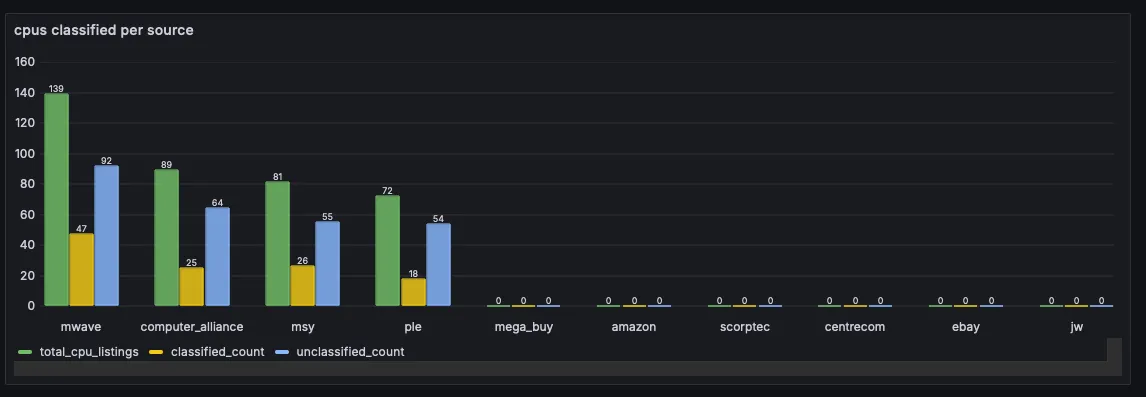

- Added some more graphs on Grafana to show the number of CPUs classified and not classified.

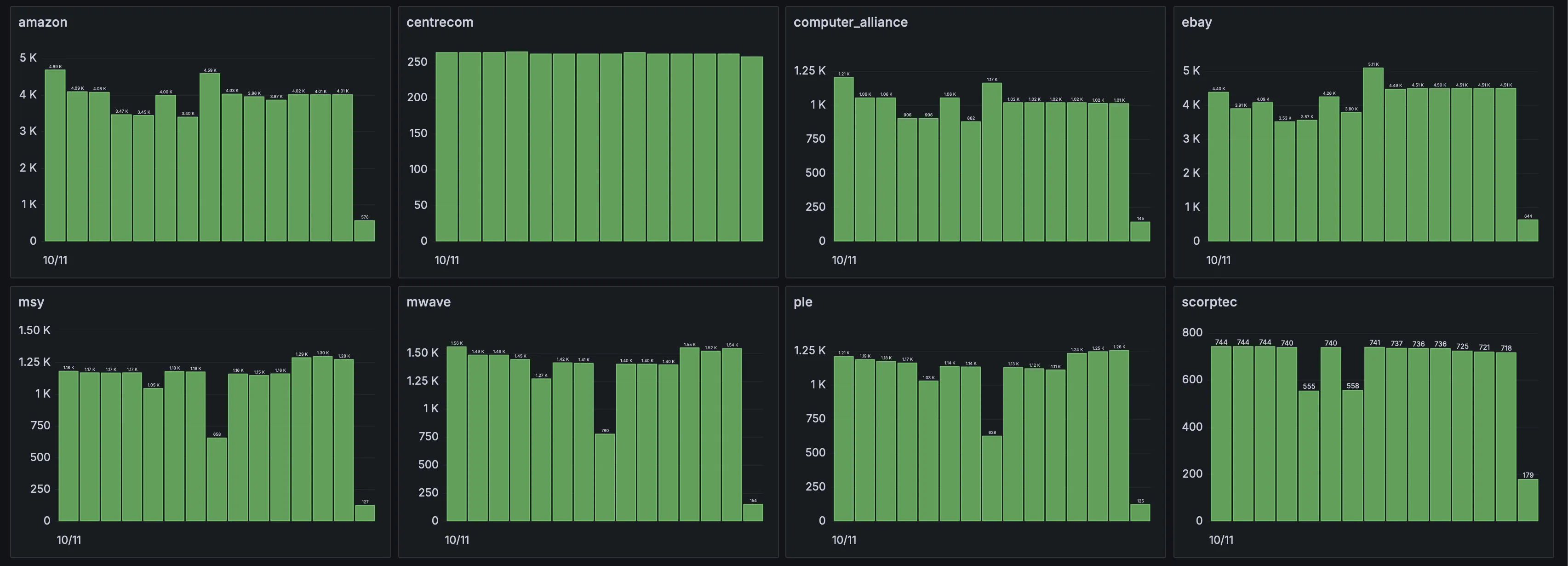

and GPU snapshots per source per day

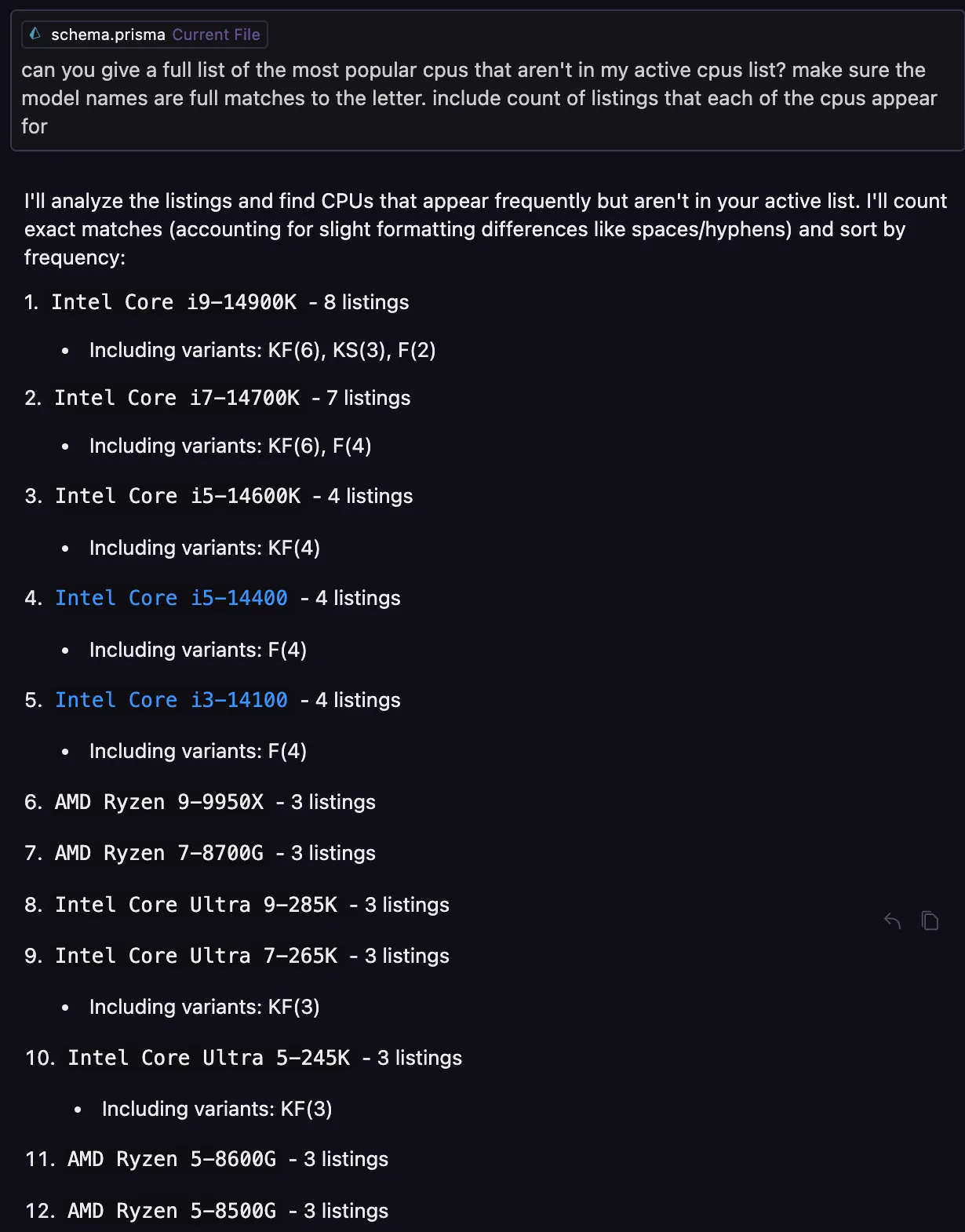

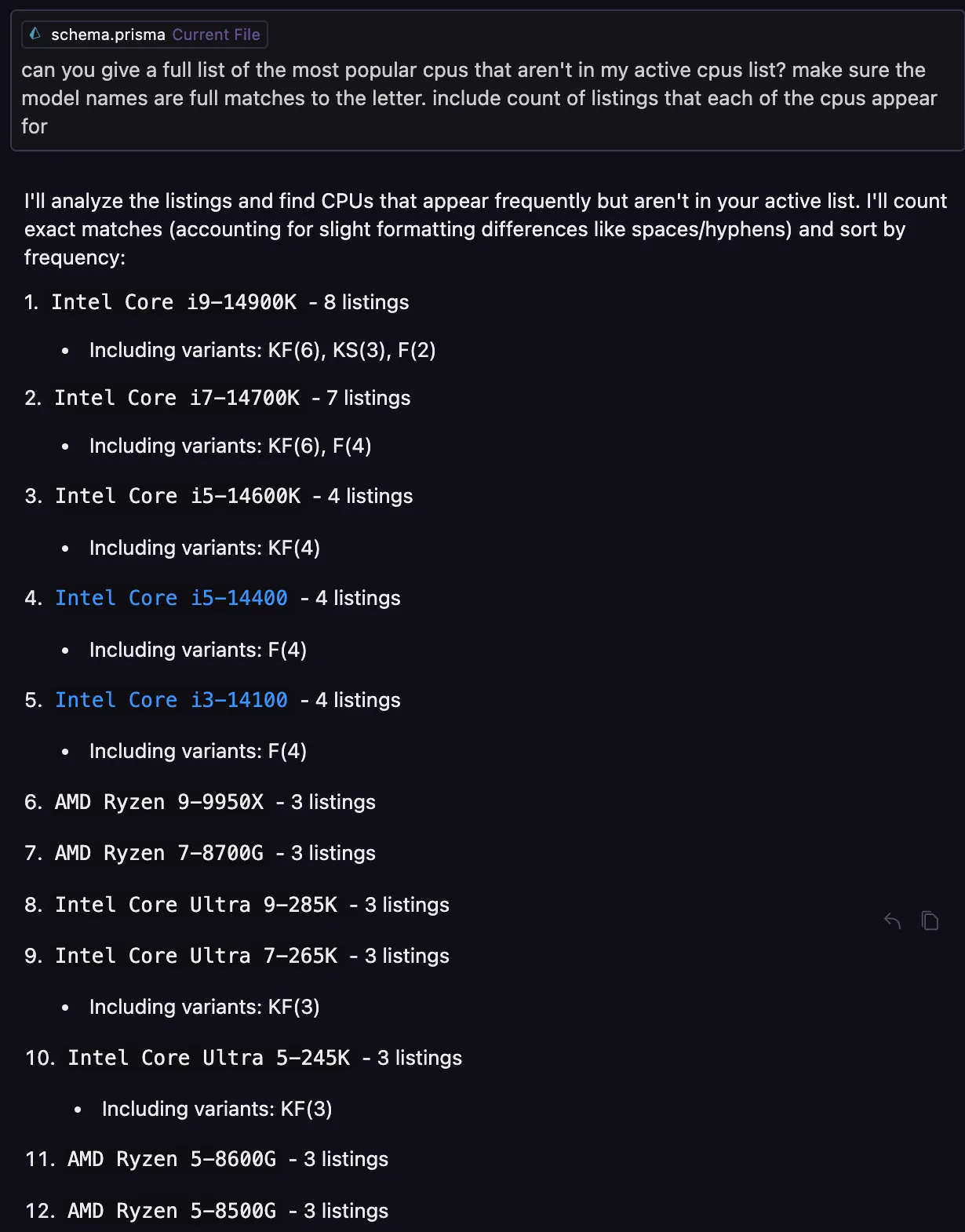

- Used Cursor to read the titles of all CPU listings in the db, along with all CPU models in the db, and give me a list of CPU models that are missing and should be added. Based on this, I updated my code to get the 4 new generation Intel CPUs and added specs for those to the db.

- Started searching Amazon for each of the CPU models and saving price snapshots for each listing in the results. Also started classifying a few of the Amazon CPUs, but I need to implement handling of negative classifications so the AI doesn’t try to classify the same thing 10 times.

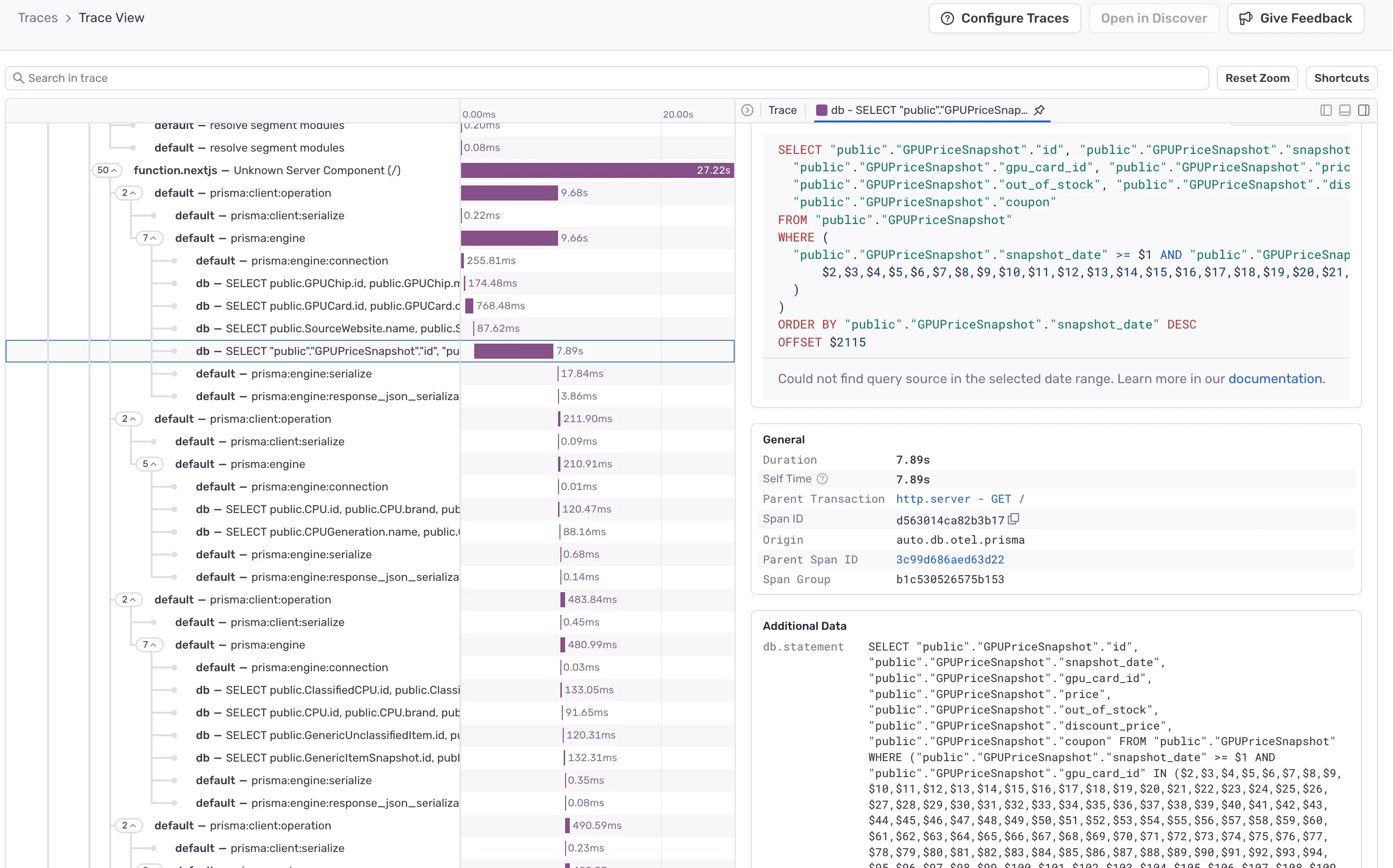

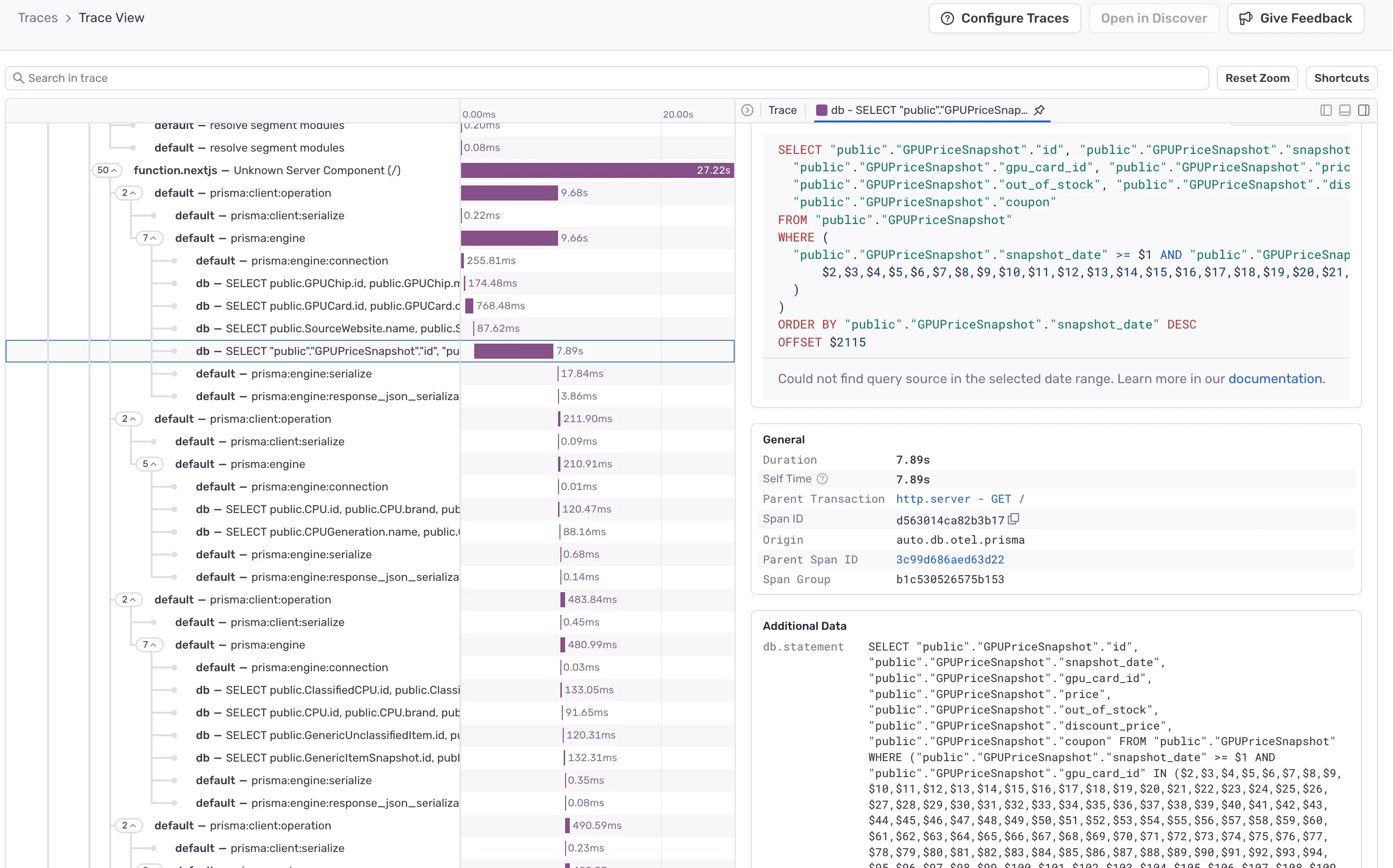
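A minimal sketch of how negative classifications could be recorded so the same listing isn't sent to the AI over and over. Everything here is hypothetical (the outcome kinds, the in-memory `Map`, the retry limit); in practice this would more likely be a table in the db.

```typescript
type Outcome =
  | { kind: "matched"; cpuModel: string }
  | { kind: "unknown_model" } // looks like a CPU, but that model isn't in the db yet
  | { kind: "not_a_cpu" } // listing is something else entirely
  | { kind: "error"; message: string }; // some other reason, e.g. a failed model call

interface AttemptRecord {
  outcome: Outcome; // most recent outcome
  attempts: number; // total attempts so far
  knownModelCount: number; // how many CPU models the db had at the last attempt
}

class ClassificationLog {
  private readonly entries = new Map<string, AttemptRecord>();

  record(listingId: string, outcome: Outcome, knownModelCount: number): void {
    const previous = this.entries.get(listingId);
    this.entries.set(listingId, {
      outcome,
      attempts: (previous?.attempts ?? 0) + 1,
      knownModelCount,
    });
  }

  shouldClassify(listingId: string, knownModelCount: number, maxErrorRetries = 3): boolean {
    const previous = this.entries.get(listingId);
    if (!previous) return true; // never tried
    const kind = previous.outcome.kind;
    // Retry an unknown model only once new CPU models have been added to the db.
    if (kind === "unknown_model") return knownModelCount > previous.knownModelCount;
    // Retry errors a limited number of times.
    if (kind === "error") return previous.attempts < maxErrorRetries;
    return false; // "matched" or "not_a_cpu": nothing more to do
  }
}
```

Storing the reason alongside the negative result means "not a CPU" listings are skipped for good, while "unknown model" listings get another pass after new models are added.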

- Debugged why Next.js is taking so long to load some pages. Didn’t find out exactly where the time goes or fix anything, but set up Sentry, which has a decent interface for performance. Annoyingly, it doesn’t map Prisma queries to the SQL queries shown in the interface. Also annoyingly, the docs for getting Prisma working with Sentry don’t work:

`Attempted import error: 'prismaIntegration' is not exported from '@sentry/nextjs' (imported as 'Sentry').`

- Started migrating the database and backend server to a new VPS after internet issues on the weekend. I run them on Nomad and expected the migration to be easy. The DB was OK, but for the backend I also wanted to get a Docker image registry going within my Tailscale network, so multiple GitHub Actions runners can do builds and save Docker images. That ended up being a bit hard to get going and I haven’t been successful yet.
